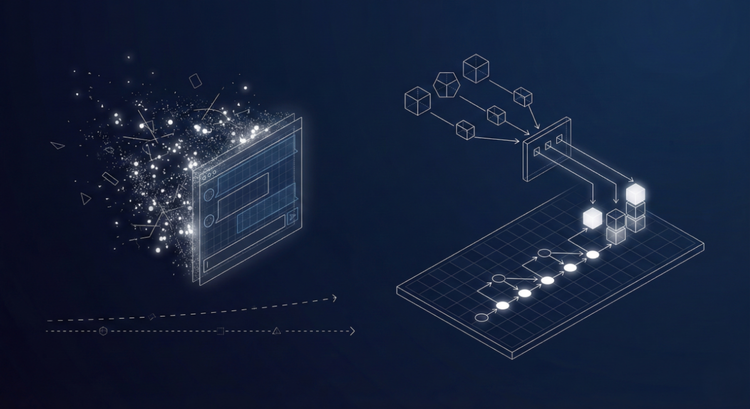

From Probabilistic Prediction to Deterministic Orchestration

The State of Agents (Part 6/6): Having covered the mechanics of State and ROI, we conclude with a vision of what comes next: a move from the Probability of Prediction to the Certainty of Orchestration.