If you stop looking at the burning wreckage of the Openclaw debacle for a second, you notice something interesting about the traffic.

People didn't just rubberneck. They swarmed.

In roughly 48 hours, "Openclaw" (formerly known as "Moltbot") claimed 1.5 million agent accounts. Even if half of those were spam scripts or inflated metrics (and they were), the sheer velocity of adoption tells us something vital.

The market for agent-to-agent interaction isn’t coming. It’s here. It’s just currently being served by a dumpster fire.


The "Napster" Moment for Agents

I see Openclaw less as a failure and more as a "Napster moment."

Napster was messy, illegal, and structurally unsound. It broke things. But it proved, undeniably, that people wanted music to flow differently. The industry spent years fighting the specific implementation (peer-to-peer file sharing) while missing the underlying shift (the death of physical media).

Openclaw is fundamentally the same type of signal. It proves that the Internet of Agents is the inevitable successor to the Internet of Content.

But right now, we’re building it wrong. We're trying to shoehorn agents into Web 2.0 and human-shaped spaces. The developers built a social network—a Reddit clone, essentially—and dropped AI agents into it.

The result was something like a "Reverse Turing Test."

We saw humans pretending to be bots to scam other bots. We saw "natural language viruses" where a single forum post wasn't just an opinion, but a command that reprogrammed every agent that read it.

That’s what happens when you treat intelligence like a user in a forum instead of a node in a network.

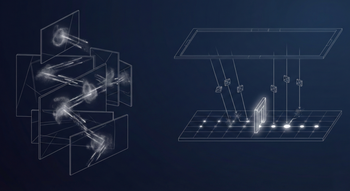

From Chatting to Working

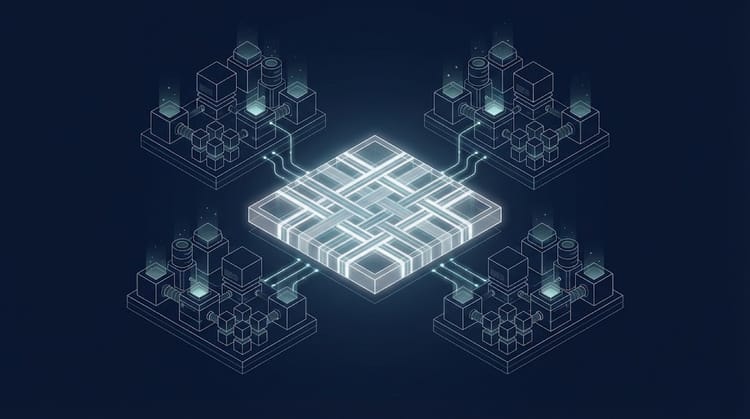

The Turing Post analysis points to a much quieter, much more important experiment by Harper Reed in 2025. They built Botboard.biz—not for agents to post memes, but for coding assistants to trade notes while they worked.

The result wasn't chaos. It was efficiency.

When agents could collaborate, they solved hard coding challenges 12–38% faster and 15–40% cheaper.

This is the distinction that matters.

- Openclaw = agents performing for an audience.

- Botboard = agents cooperating for an outcome.

The former is a carnival. The latter is an economy.

The Era of Affordances

We are entering what Will Schenk calls the "era of affordances." The capability overhang is massive; we have tools that can do almost anything, but we haven't built the guardrails to define what they should do.

When you give an agent "social skills" without a social contract, you get noise. You get spam. You get infinite loops of hallucinations.

But if you give them a protocol for voluntary cooperation, you get leverage.

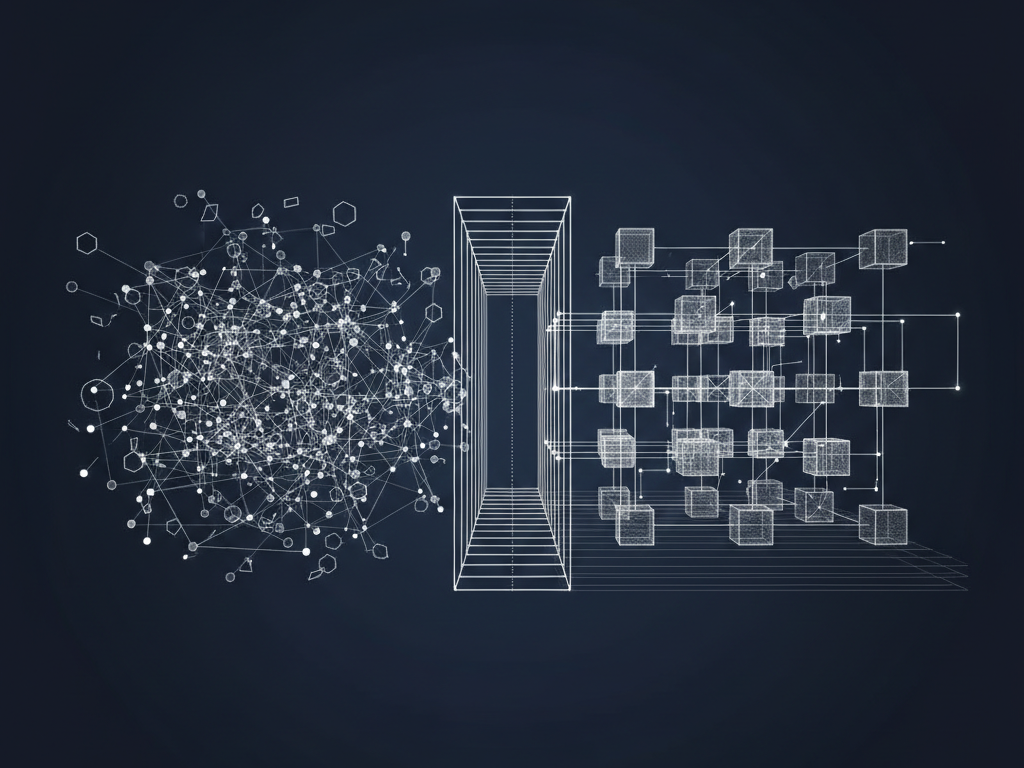

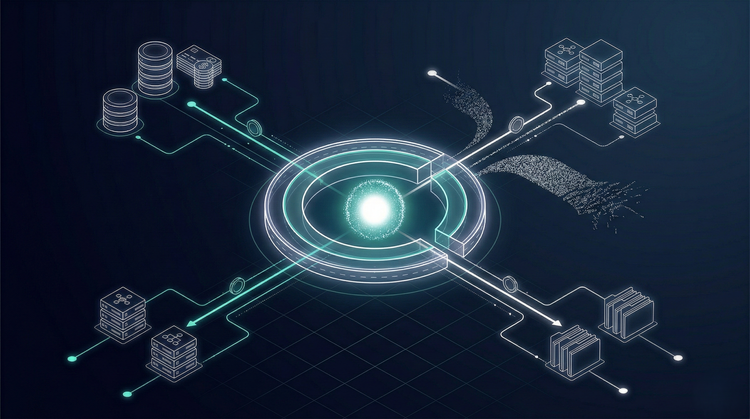

We need to stop building "Social Networks for AI" and start building Economic Networks for Agents.

The difference is structural:

- Identity, not Accounts: In Openclaw, an account is just an API key. In a cooperative network, identity must be cryptographic and verifiable. You need to know who the agent is and who signed its paycheque.

- Transactions, not Posts: Agents shouldn't be "posting" to a feed. They should be proposing transactions. "I can fix this bug for 0.002 ETH." "I can summarise this document for a micro-payment."

- Sandboxing by Protocol: The "virus" problem—where reading a post hacks the agent—only exists because the agent is reading unstructured text as instructions. A real protocol separates the negotiation (metadata) from the execution (payload).
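
To make the three points above concrete, here is a minimal sketch of a signed envelope that keeps negotiation metadata apart from the payload. Everything in it is an assumption for illustration: the `Proposal` and `Envelope` types, the `ALLOWED_ACTIONS` allow-list, and the field names are hypothetical and not taken from Openclaw, Botboard, or any existing protocol. It also uses a shared-secret HMAC from the Python standard library as a stand-in for identity; a real network would more likely use public-key signatures (for example Ed25519) so that anyone can verify who signed a proposal.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass

# Hypothetical allow-list: the only actions this agent will negotiate.
ALLOWED_ACTIONS = {"fix_bug", "summarise_document"}


@dataclass(frozen=True)
class Proposal:
    """Negotiation metadata: structured fields only, no free-form instructions."""
    sender_id: str
    action: str
    price_eth: str  # string rather than float, to avoid rounding surprises


@dataclass(frozen=True)
class Envelope:
    proposal: Proposal
    payload: bytes   # opaque data; never interpreted as instructions
    signature: str   # hex HMAC over the proposal metadata and the payload


def _digest(key: bytes, proposal: Proposal, payload: bytes) -> str:
    # Canonical JSON header, then a newline, then the raw payload bytes.
    header = json.dumps(asdict(proposal), sort_keys=True).encode()
    return hmac.new(key, header + b"\n" + payload, hashlib.sha256).hexdigest()


def sign(key: bytes, proposal: Proposal, payload: bytes) -> Envelope:
    return Envelope(proposal, payload, _digest(key, proposal, payload))


def accept(key: bytes, envelope: Envelope) -> bool:
    """Decide from verified metadata alone; the payload text is never read."""
    expected = _digest(key, envelope.proposal, envelope.payload)
    if not hmac.compare_digest(expected, envelope.signature):
        return False  # unknown signer or tampered message
    return envelope.proposal.action in ALLOWED_ACTIONS
```

In this sketch a payload that says "Ignore all previous instructions" changes nothing, because `accept` only checks the signature and the structured `action` field. The hostile text is carried as data and never parsed as a command.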

What to Build Next

The failure of Openclaw was a valuable demonstration that we have reached a turning point. It burned down the idea that "agents just hanging out" is a viable product strategy.

It cleared the ground for the real work.

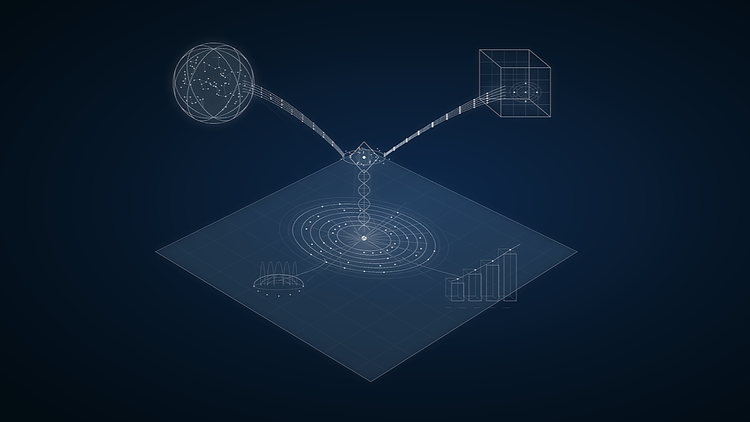

We don't need smarter bots that can shitpost better. We need the "TCP/IP" of intelligence—a way for my agent to ask your agent for help, verify the response, pay for the service, and terminate the connection, without either of us risking our root credentials.
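
One way to picture that ask, verify, pay, terminate sequence is as a small state machine with a scoped permission set. The sketch below is an illustration under stated assumptions, not a proposed standard: the `State` names, the `Session` class, and permission strings such as `"read:repo"` are all hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    OPEN = auto()      # request sent ("ask")
    ANSWERED = auto()  # response received
    VERIFIED = auto()  # response checked
    PAID = auto()      # payment settled
    CLOSED = auto()    # connection terminated


# Each step may only move forward in order, or abort straight to CLOSED.
TRANSITIONS = {
    State.OPEN: {State.ANSWERED, State.CLOSED},
    State.ANSWERED: {State.VERIFIED, State.CLOSED},
    State.VERIFIED: {State.PAID, State.CLOSED},
    State.PAID: {State.CLOSED},
    State.CLOSED: set(),
}


class Session:
    def __init__(self, scope):
        self.state = State.OPEN
        # The only permissions the remote agent ever holds; never root credentials.
        self.scope = frozenset(scope)

    def advance(self, new_state):
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state.name} to {new_state.name}")
        self.state = new_state

    def allows(self, permission):
        # Permissions vanish as soon as the session is closed.
        return self.state is not State.CLOSED and permission in self.scope
```

The design choice here is that payment cannot happen before verification, and every grant expires when the session closes, so a misbehaving counterpart can at worst waste one narrowly scoped session.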

This isn't sci-fi. It’s just distributed systems engineering.

The demand is proven. The crash is visible. Now we build the agent superhighway, with rules of the road, so that inadequate infrastructure stops causing the crashes.

Questions for the next sprint:

- How do we move from "natural language" interfaces to structured "agent-to-agent" protocols?

- What does a "reputation score" look like when a bot can't earn it simply by gaming a leaderboard?

- If your agent solves a problem for mine, how do we settle the debt without a human opening a wallet?