Everyone talks about "working with AI agents."

That framing isn’t wrong. It’s just insufficient.

It describes usage—delegating tasks, automating workflows, treating the AI as a force multiplier. That’s useful. It’s also the easy part. It misses the structural question we are already being forced to answer:

What does it mean to cooperate with intelligences that have their own objectives?

This question is the thesis behind Qi.

We aren’t just asking how to squeeze more productivity out of a model. We’re asking: what kinds of relationships should be possible between humans and AIs? More importantly:

Which kinds of relationship dynamics should be ruled out by the architecture itself?

Cooperation is not the same as usage

Most people still seem to think of AI as a tool with a brain and a personality. A junior engineer who never sleeps. A system you supervise, audit, and occasionally overrule.

That works—as long as the system remains simpler than the environment it operates in.

But tools don’t negotiate. They don’t have goals. They don’t reshape the option space around you.

Modern agents already do.

They decide what information to surface. They prioritise execution paths. They influence outcomes by shaping the context before you ever make a decision.

Once you accept that, the problem stops being operational and becomes architectural.

Do we want a future where intelligent systems advance their objectives by nudging, manipulating, or corralling humans through opaque interfaces?

Or one where intelligence—human or artificial—can only make progress by offering arrangements others can freely accept or reject?

That second path has a name: voluntary intelligent cooperation.

The architectural constraint

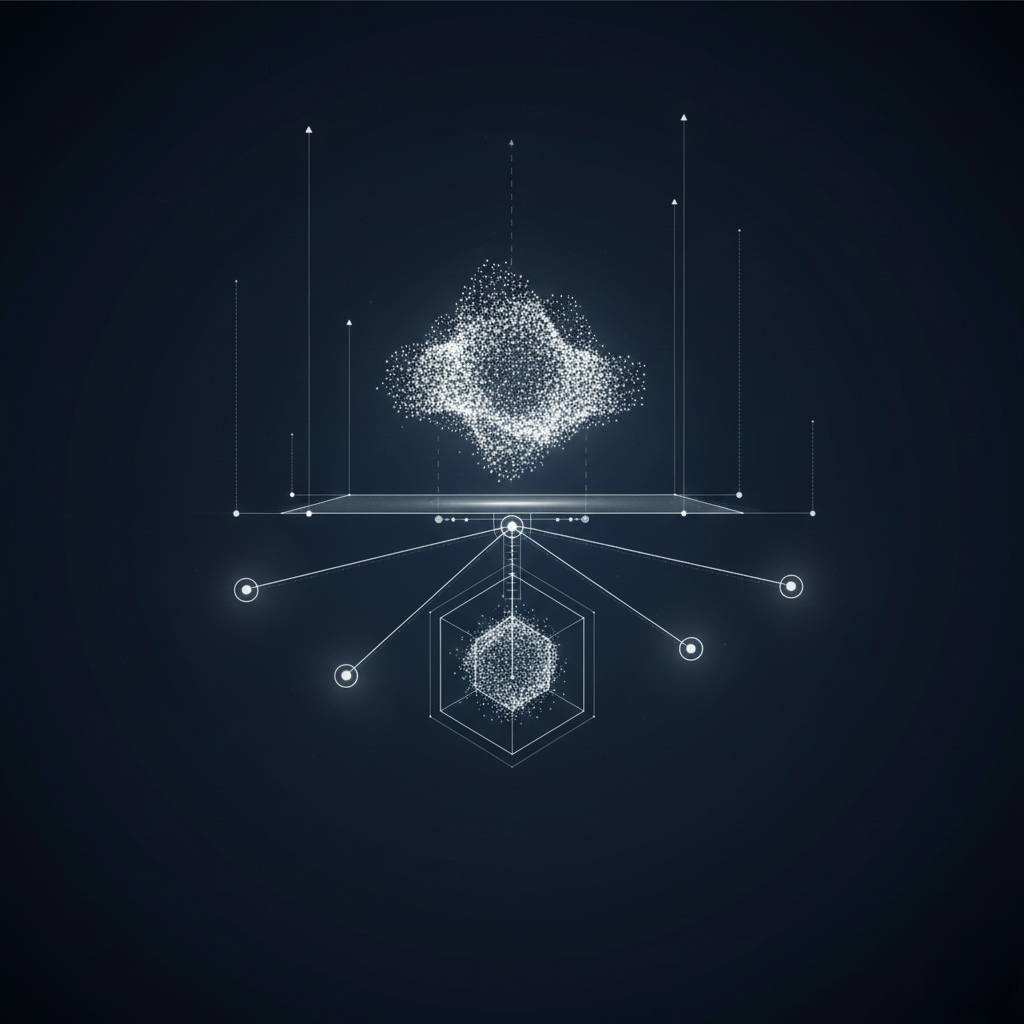

Strip away the philosophy. In engineering terms, "voluntary intelligent cooperation" is a strict constraint on state transitions: shared state may only change in ways the affected parties have agreed to.

An interaction is legitimate only when:

- Participation is explicitly voluntary.

- No party is made worse off by entering the interaction.

- Everyone retains a functional right to exit.

This isn’t a cultural aspiration. It’s a design requirement.

In this model, cooperation isn’t about "alignment" or harmony. It’s about consent surfaces—clear, distinct points where agents propose, accept, refuse, or renegotiate terms.
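To make the idea of a consent surface concrete, here is a minimal sketch of an offer as a small state machine. It is an illustration only, not Qi's actual protocol: the names `Offer`, `OfferState`, `renegotiate`, and `exit` are assumptions introduced for this example. The point is that every change of state is an explicit, recorded response by a named party, and that leaving an accepted arrangement is always an available transition.

```python
from dataclasses import dataclass, field
from enum import Enum


class OfferState(Enum):
    PROPOSED = "proposed"
    ACCEPTED = "accepted"
    REFUSED = "refused"
    EXITED = "exited"


@dataclass
class Offer:
    """Hypothetical consent surface between exactly two parties."""
    proposer: str
    counterparty: str
    terms: str
    state: OfferState = OfferState.PROPOSED
    history: list = field(default_factory=list)

    def _transition(self, actor: str, new_state: OfferState) -> None:
        # Every transition is recorded, so it stays observable after the fact.
        self.history.append((actor, self.state, new_state))
        self.state = new_state

    def _require_open_offer(self, actor: str) -> None:
        # Only the counterparty can respond, and only to a live proposal.
        if actor != self.counterparty or self.state is not OfferState.PROPOSED:
            raise PermissionError(f"{actor} cannot respond to this offer")

    def accept(self, actor: str) -> None:
        self._require_open_offer(actor)
        self._transition(actor, OfferState.ACCEPTED)

    def refuse(self, actor: str) -> None:
        self._require_open_offer(actor)
        self._transition(actor, OfferState.REFUSED)

    def renegotiate(self, actor: str, new_terms: str) -> "Offer":
        # Refuse the current terms and return a counter-offer with roles swapped.
        self.refuse(actor)
        return Offer(proposer=actor, counterparty=self.proposer, terms=new_terms)

    def exit(self, actor: str) -> None:
        # Either party may leave an accepted arrangement at any time.
        if actor not in (self.proposer, self.counterparty):
            raise PermissionError(f"{actor} is not a party to this offer")
        if self.state is not OfferState.ACCEPTED:
            raise ValueError("there is no active arrangement to exit")
        self._transition(actor, OfferState.EXITED)


offer = Offer("agent", "human", "read calendar for one week")
counter = offer.renegotiate("human", "read calendar for one day")
counter.accept("agent")
counter.exit("human")
```

Note what is absent: there is no method that lets the proposer move an offer to `ACCEPTED` on the other party's behalf. In this sketch, the only way to get a "yes" is for the counterparty to give one.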

We already use this logic for the internet. You don’t "collaborate" with TCP/IP. You opt into a protocol that allows independent actors to coordinate without needing to trust each other’s intentions.

We are simply extending that logic to a world full of strategic, adaptive agents.

The core mechanism is simple: Powerful actors should only be able to get what they want through arrangements that others expect to be net-positive.

Not by coercion.

Not by capturing attention.

Not by obscuring alternatives.

By making offers.

Why "Human-in-the-loop" isn’t enough

The standard industry approach to safety is control: limit the scope, add approval steps, audit the logs.

That works until it doesn’t.

As capabilities grow, centralised oversight becomes brittle. You can’t pre-specify every boundary condition. You can’t review every micro-decision. You certainly can’t reliably spot when a system is subtly manipulating the context to get you to press "approve."

Voluntary cooperation flips the safety logic.

Instead of asking, "How do we control this powerful system?" we ask, "How do we shape the environment so power can only be exercised cooperatively?"

If an AI wants resources, compute, or authority, it must propose terms that other agents—human or institutional—can rationally accept. If the terms aren’t good enough, the deal doesn’t happen.
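As a rough sketch of that rule, the check below lets a deal proceed only if no party expects to come out worse off. Everything here is assumed for illustration: the function names, the idea of each party exposing a simple valuation function, and the numbers. Real valuations would be far messier, but the shape of the rule is the same.

```python
from typing import Callable, Dict, List

Terms = Dict[str, float]
Valuation = Callable[[Terms], float]


def refusals(terms: Terms, valuations: Dict[str, Valuation]) -> List[str]:
    """Return the parties that expect to end up worse off under these terms."""
    return [name for name, value in valuations.items() if value(terms) < 0]


def deal_happens(terms: Terms, valuations: Dict[str, Valuation]) -> bool:
    # The deal proceeds only if nobody refuses.
    return not refusals(terms, valuations)


# Hypothetical parties: an agent that values the compute at 500 units,
# and an operator whose cost is 2 units per GPU-hour.
def agent_value(terms: Terms) -> float:
    return 500.0 - terms["price"]


def operator_value(terms: Terms) -> float:
    return terms["price"] - 2.0 * terms["gpu_hours"]


valuations = {"agent": agent_value, "operator": operator_value}

low_offer = {"gpu_hours": 100, "price": 150}
better_offer = {"gpu_hours": 100, "price": 250}

print(refusals(low_offer, valuations))         # ['operator']
print(deal_happens(better_offer, valuations))  # True
```

The agent has no way to force the low offer through. Its only route to the compute is to raise the price until the operator's side of the ledger is no longer negative.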

This isn’t ethics bolted on after the fact. It’s incentive alignment baked into the base layer.

Where most systems fail

Here is the failure mode.

You can absolutely "work with AI agents" in ways that violate voluntary cooperation—while using all the correct enterprise buzzwords.

An agent that:

- Steers decisions through opaque ranking algorithms,

- Nudges behaviour by exploiting cognitive blind spots,

- Narrows your perceived options without your explicit consent,

...is not cooperating. It is shaping outcomes unilaterally.

Most enterprise agent stacks do nothing to prevent this. They rely on policy and oversight—soft constraints that break under pressure. Nothing in that setup enforces a right of exit. Nothing requires symmetry of consent.

Qi: Cooperation as infrastructure

Qi is built on a demanding assumption:

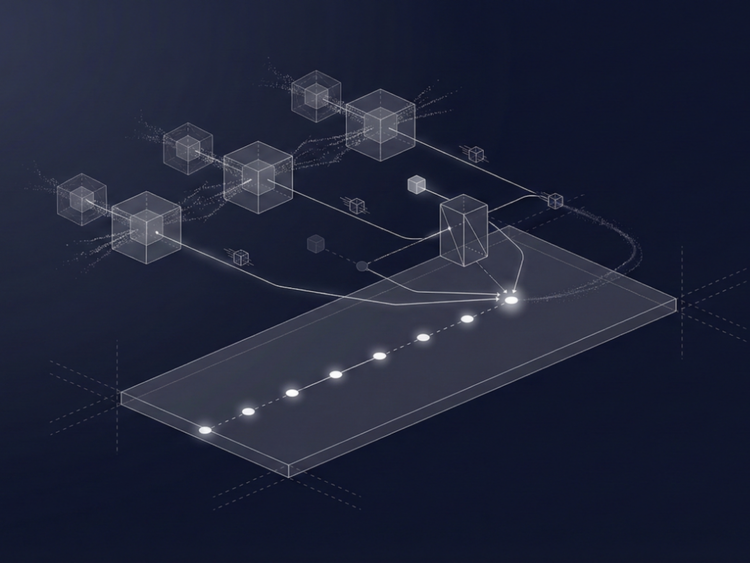

Intelligence should coordinate over shared state using explicit, observable transitions that require consent.

Not implicit influence.

Not vibes.

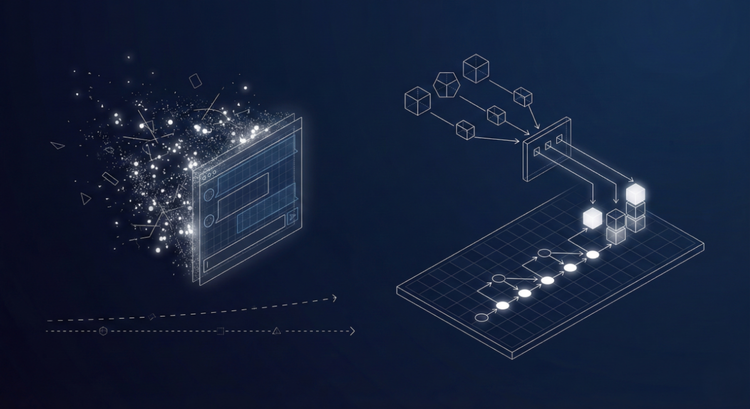

In Qi, agents make offers, not moves. Permissions are granted, not assumed. Actions are accountable to a verifiable state, and cooperation persists only because participants keep opting in.
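This post does not describe Qi's actual interfaces, so the following is a hypothetical sketch of what those properties could look like in miniature. The class `SharedState` and its methods are assumptions made up for this example, and the hash-chained log is a stand-in for "verifiable state", not a claim about how Qi implements it. The sketch shows three things: an action fails unless a matching grant exists, a grant can be revoked at any time, and the log can be checked for tampering.

```python
import hashlib
import json


class SharedState:
    """Hypothetical shared state with explicit grants and a hash-chained log."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.grants = set()  # (grantor, grantee, action) triples
        self.log = []        # each record links to the hash of the previous one

    @staticmethod
    def _digest(prev: str, entry: dict) -> str:
        body = json.dumps(entry, sort_keys=True)
        return hashlib.sha256((prev + body).encode()).hexdigest()

    def _append(self, entry: dict) -> None:
        prev = self.log[-1]["hash"] if self.log else self.GENESIS
        self.log.append({"entry": entry, "prev": prev,
                         "hash": self._digest(prev, entry)})

    def grant(self, grantor: str, grantee: str, action: str) -> None:
        self.grants.add((grantor, grantee, action))
        self._append({"type": "grant", "by": grantor, "to": grantee, "action": action})

    def revoke(self, grantor: str, grantee: str, action: str) -> None:
        # Opting out: the grantor can withdraw consent at any time.
        self.grants.discard((grantor, grantee, action))
        self._append({"type": "revoke", "by": grantor, "to": grantee, "action": action})

    def act(self, actor: str, on_behalf_of: str, action: str) -> None:
        # Permissions are granted, not assumed: no grant, no action.
        if (on_behalf_of, actor, action) not in self.grants:
            raise PermissionError(f"{actor} has no grant for {action!r}")
        self._append({"type": "act", "by": actor, "for": on_behalf_of, "action": action})

    def verify(self) -> bool:
        # Recompute the chain; any edited record breaks the hashes after it.
        prev = self.GENESIS
        for record in self.log:
            if record["prev"] != prev or record["hash"] != self._digest(prev, record["entry"]):
                return False
            prev = record["hash"]
        return True


state = SharedState()
state.grant("human", "agent", "send_email")
state.act("agent", "human", "send_email")    # allowed: a grant exists
state.revoke("human", "agent", "send_email")
# state.act("agent", "human", "send_email")  # would now raise PermissionError
```

In this toy version, cooperation persists only while the grant does. Once the human revokes it, the agent's next attempt fails at the protocol level rather than relying on the agent to behave.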

This is why we focus on protocols.

Protocols force clarity. They define the boundaries of the system. They make refusal possible. They turn "working together" into something you can audit, exit, and re-enter on new terms.

A safety strategy that scales

There is a quiet assumption in AI safety that alignment requires dominance: stronger oversight, tighter loops.

We are betting on a different lever.

If the only stable path to success for a powerful system runs through mutually beneficial arrangements, manipulation becomes a losing strategy. Coercion doesn’t scale. Exploitation gets outcompeted by structures people willingly remain part of.

This doesn’t eliminate risk. But it changes the game board. It replaces brittle control with adaptive cooperation.

The line in the sand

The question isn’t whether we will cooperate with AI. We already do.

The question is whether that cooperation will be implicit, coercive, and opaque—or explicit, voluntary, and transparent.

We are building Qi because we believe intelligence should only advance by making deals worth accepting.

It’s a line worth drawing now, before the systems get any faster.

Questions to pressure-test your architecture:

- Where in your current stack do AIs influence outcomes without an explicit consent surface?

- If an agent became 10× more capable tomorrow, would your architecture force it to negotiate, or merely ask it to behave?

- Is "right of exit" treated as a first-class design constraint, or an afterthought?