Why you cannot fix hallucinations with more hallucinations.

There's a new consensus forming in Silicon Valley: Human-in-the-loop has hit a wall.

The argument, recently articulated by SiliconANGLE, is that humans are too slow, too expensive, and too cognitively limited to oversee the millions of decisions AI agents make per second.

They are right about the problem. The current state of "Human-in-the-Loop" (HITL) is mostly theatre. We force humans to stare at dashboards and click "Approve" 500 times an hour. That isn’t oversight; it’s a rubber stamp.

But their proposed solution, "AI overseeing AI," is a dangerous trap.

They suggest we build layers of "Supervisor AIs" to watch the "Worker AIs." But if your Supervisor AI has no grounding in reality—if it is just another LLM processing text—you haven't solved the hallucination problem. You have just squared it.

You are building a hall of mirrors where hallucinations watch hallucinations.

ICYMI: The State of Agents (Part 2/6) - Why "Self Empowerment" is a consumer fantasy, and true agency requires authority to act.

The Missing Piece: Anchors, not Monitors

At IXO, we anticipated this scaling limit. That is why we have taken a fundamentally different approach to building our Qi intelligent cooperating system.

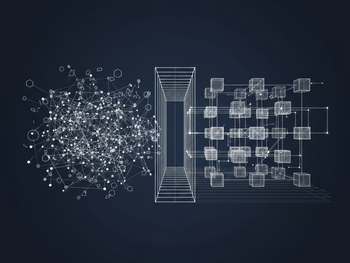

We believe the answer to the "Human Bottleneck" is not to replace humans with bots, but to replace Subjective Review with Objective State.

In the Qi architecture, we don't just have "agents." We have Agentic Oracles.

An Agentic Oracle doesn't just "do work." It stakes its reputation on the outcome of that work. When an agent in the Qi network makes a claim—"I completed this transaction" or "I verified this carbon credit"—it isn't asking for a human to read a chat log. It isn't asking a Supervisor AI to check its tone.

It is submitting a cryptographic proof to a shared ledger.
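To make "a cryptographic proof" concrete, here is a minimal sketch of what a signed claim could look like. It is not the IXO protocol: the claim fields, the `make_claim`/`verify_claim` helpers and the demo key are hypothetical, and HMAC-SHA256 from Python's standard library stands in for the asymmetric signatures a real network would use. What it shows is that a verifier recomputes the proof instead of reading the agent's prose, so any edit to the claim breaks verification.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real network would use an asymmetric scheme
# (e.g. Ed25519) so verifiers never hold the signing key; HMAC-SHA256 from
# the standard library stands in for it in this sketch.
AGENT_KEY = b"agent-42-demo-key"


def canonical_bytes(payload: dict) -> bytes:
    """Serialise deterministically so every verifier hashes identical bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def make_claim(agent_id: str, statement: str, evidence: dict, key: bytes) -> dict:
    """Bundle a claim with a digest of its content and a tag over that digest."""
    body = {"agent": agent_id, "statement": statement, "evidence": evidence}
    digest = hashlib.sha256(canonical_bytes(body)).hexdigest()
    tag = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "digest": digest, "signature": tag}


def verify_claim(claim: dict, key: bytes) -> bool:
    """Recompute digest and tag; an altered body or tag makes this return False."""
    digest = hashlib.sha256(canonical_bytes(claim["body"])).hexdigest()
    if digest != claim["digest"]:
        return False
    expected = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, claim["signature"])


if __name__ == "__main__":
    claim = make_claim("agent-42", "verified carbon credit", {"credit_id": "CC-001"}, AGENT_KEY)
    print(verify_claim(claim, AGENT_KEY))  # True
    claim["body"]["statement"] = "verified 10 carbon credits"  # the agent embellishes
    print(verify_claim(claim, AGENT_KEY))  # False
```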

Scaling Trust without "AI Middle-Managers"

Here is how we close the loop without adding more bots:

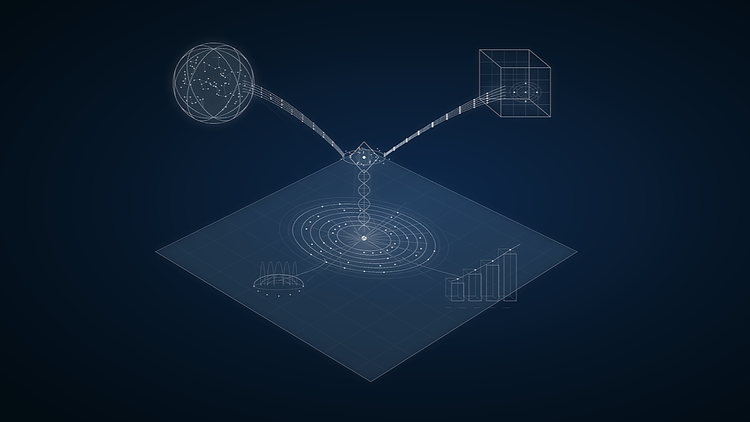

- The Human Defines the Policy: The human sets the invariant rules (e.g., "No payment over $10k without 2 signatures") and delegates authority with explicit intent, within explicitly encoded object-capability scopes. Human effort goes into high-leverage policy work, not rubber-stamping.

- The Agent Executes: The agent performs the task and, if the task produces a significant state change, submits a verifiable claim.

- The State Verifies: Instead of a "Supervisor AI" loosely interpreting the output, the IXO protocol acts as a deterministic check against a predefined rubric. Did the agent produce the required verifiable credential? Did the state transition match the policy?
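The three steps can be sketched as a deterministic rubric. The sketch below assumes hypothetical `Policy` and `Transition` types and a `check` function; it encodes the example rule above ("No payment over $10k without 2 signatures"), a capability scope listing which actions the agent was delegated, and the requirement that a verifiable credential be attached. It illustrates the idea and is not IXO's implementation.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class Policy:
    """Invariants a human sets once (field names are hypothetical)."""
    single_signer_limit: int = 10_000  # dollars
    signatures_above_limit: int = 2
    allowed_actions: FrozenSet[str] = frozenset({"payment"})  # delegated capability scope


@dataclass
class Transition:
    """A state change an agent proposes, with the evidence it attaches."""
    action: str
    amount: int
    signatures: Set[str] = field(default_factory=set)
    credential: Optional[str] = None  # id of a verifiable credential, if any


def check(policy: Policy, t: Transition) -> List[str]:
    """Deterministic rubric: the same input always yields the same verdict."""
    violations: List[str] = []
    if t.action not in policy.allowed_actions:
        violations.append(f"action '{t.action}' is outside the delegated scope")
    if t.credential is None:
        violations.append("no verifiable credential attached")
    if t.amount > policy.single_signer_limit and len(t.signatures) < policy.signatures_above_limit:
        violations.append(
            f"payment of ${t.amount} needs {policy.signatures_above_limit} signatures, "
            f"got {len(t.signatures)}"
        )
    return violations


if __name__ == "__main__":
    policy = Policy()
    good = Transition("payment", 12_000, {"alice", "bob"}, "vc-123")
    short = Transition("payment", 12_000, {"alice"}, "vc-124")
    print(check(policy, good))        # []
    print(len(check(policy, short)))  # 1
```

An empty list means the transition passes; anything else is a machine-readable reason, with no model in the loop interpreting tone.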

If the state matches, the transaction settles and the human never needs to look at it. If the check fails, the transaction reverts and emits an error message.

This is Optimistic Verification anchored in Shared State.
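One simple way to picture settle-or-revert: apply the agent's proposed change to a scratch copy of the state, test the human's invariants against the result, and commit only if every invariant holds. The account names, the `settle` helper and the `no_negative_balances` invariant are hypothetical, and a production ledger would also need concurrency control and persistence that this sketch leaves out. (In blockchain systems, "optimistic" often also implies a dispute window in which a settled result can be challenged; the sketch shows only the tentative-apply-then-check pattern.)

```python
import copy
from typing import Callable, Dict, List, Tuple

State = Dict[str, int]  # account -> balance
Invariant = Callable[[State], Tuple[bool, str]]


def no_negative_balances(state: State) -> Tuple[bool, str]:
    """Example invariant: no account may go below zero."""
    bad = [name for name, balance in state.items() if balance < 0]
    return (not bad, f"negative balance for {bad}" if bad else "")


def settle(state: State, src: str, dst: str, amount: int,
           invariants: List[Invariant]) -> Tuple[State, str]:
    """Apply a transfer to a scratch copy, then commit it or revert it."""
    tentative = copy.deepcopy(state)
    tentative[src] = tentative.get(src, 0) - amount
    tentative[dst] = tentative.get(dst, 0) + amount
    for invariant in invariants:
        ok, message = invariant(tentative)
        if not ok:
            return state, f"reverted: {message}"  # original state is untouched
    return tentative, "settled"


if __name__ == "__main__":
    ledger = {"treasury": 15_000, "vendor": 0}
    ledger, status = settle(ledger, "treasury", "vendor", 5_000, [no_negative_balances])
    print(status, ledger)  # settled {'treasury': 10000, 'vendor': 5000}
    ledger, status = settle(ledger, "treasury", "vendor", 20_000, [no_negative_balances])
    print(status)  # reverted: negative balance for ['treasury']
```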

Human on the Loop, not in the Loop

We are moving from a world where humans are "in the loop" (doing the work) to a world where humans are "on the loop" (setting the physics of the system).

But you cannot have "Human on the Loop" if the loop itself is made of "slop"—unverifiable text generated by one AI and checked by another.

You need a ledger. You need a source of truth that neither the Worker AI nor the Supervisor AI can hallucinate away.
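Why can't either AI "hallucinate away" a ledger? A minimal sketch, assuming a simple hash-chained log (the `AppendOnlyLog` class is hypothetical, not an IXO component): each entry commits to the hash of the entry before it, so rewriting any past record changes every later hash and verification fails. On its own a hash chain only makes tampering detectable; immutability in practice comes from many independent parties holding copies and agreeing on the latest hash.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record's content."""
    data = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(data.encode()).hexdigest()


class AppendOnlyLog:
    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, record: dict) -> str:
        """Add a record linked to the current head and return its hash."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        h = entry_hash(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Walk the chain from the start; any rewritten record breaks a link."""
        prev = GENESIS
        for e in self.entries:
            if e["prev"] != prev or entry_hash(prev, e["record"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True


if __name__ == "__main__":
    log = AppendOnlyLog()
    log.append({"agent": "agent-42", "claim": "payment settled", "amount": 5_000})
    log.append({"agent": "agent-7", "claim": "credit verified"})
    print(log.verify())  # True
    log.entries[0]["record"]["amount"] = 50_000  # an attempt to rewrite history
    print(log.verify())  # False
```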

"AI overseeing AI" works only if both AIs are accountable to a shared, immutable state.

Without that, you aren’t building an autonomous organisation. You’re building a bureaucracy of bots. And we already have enough of those.

The IXO + Qi Advantage

- Standard HITL: 1 Human monitors 10 Agents. (Bottleneck)

- Silicon Valley's "AI Oversight": 1 AI monitors 100 Agents. (Hallucination Risk)

- Qi's State Oversight: 1 Policy governs 1,000,000 State Transitions. (Scale)

Stop trying to hire AI middle managers. Start engineering better state with Agent Physics.

In Part 4, we will look at ROI. Why are 84% of CIOs stuck in pilot purgatory? Because they are measuring "Efficiency" when they should be measuring "Impact."