I want something that sounds simple yet is complicated to achieve: a way to let my real expertise work while I sleep, without giving it away.

I don’t want a chatbot that averages me into blandness. I want a sovereign agent that thinks with my methods, cites its sources, and keeps my boundaries intact. I want an Expert Cognitive Twin.

Here is why the current tools fail us, and how we are building the architecture to solve it.

The shape of the problem

Expertise is mostly tacit. It doesn't live in PDFs or wikis; it surfaces in war stories, judgment calls, and the intuition used to navigate edge cases. It's the "gold" buried in the gravel of daily work.

Generic interview bots flatten this nuance. They lose the thread, repeat themselves, and drift into "best practices" that nobody asked for. Because they cannot distinguish between a unique insight and a Wikipedia summary, they produce an imitation that sounds like everyone and no one.

The approach that works is cooperation, not imitation.

To capture true expertise, you must pair a human with an AI companion that earns "peer status," record the reasoning with strict provenance, and package it as a service that others can trust and that the expert still controls.

Intelligent Cooperation

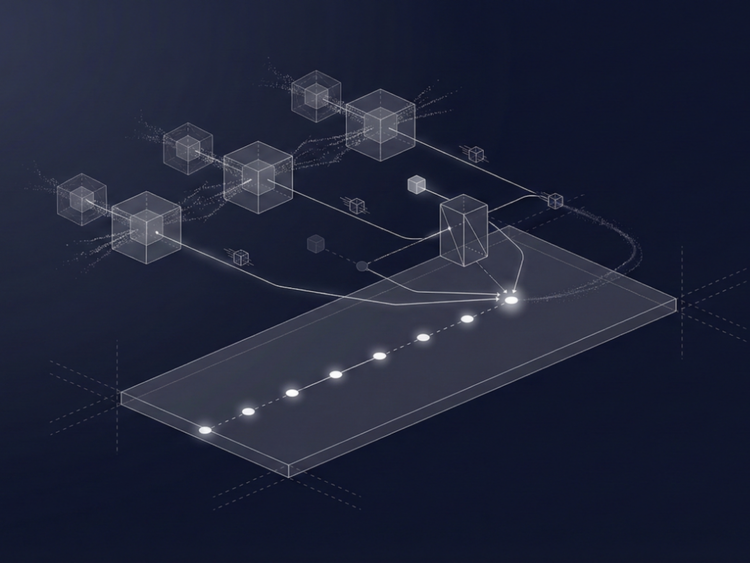

We built Qi as an intelligent cooperating system and flow engine designed for high-fidelity human–AI cooperation. It is not just a chat interface; it is a shared state machine for a growing semantic memory of your domain.

Qi delivers three critical components:

The Peer Interviewer Agent

We named our agent Minerva, after the Roman goddess of wisdom and knowledge. This agentic oracle is designed to behave like a colleague, not a subordinate. It asks precise questions, stays on topic, and knows when to push for a deeper explanation.

Crucially, Minerva is backed by a second, invisible agent—the "Scribe." While Minerva maintains eye contact, the Scribe tracks coverage, identifies gaps, monitors pacing, and detects emerging patterns. It feeds structured signals, not prose, back to Minerva. The result? The conversation stays focused, the method stays honest, and the expert feels understood.
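
To make "structured signals, not prose" concrete, here is a minimal Python sketch of the kind of record a Scribe could hand to Minerva after each answer. The `ScribeSignal` fields, the `scribe_update` function, and its thresholds (a five-minute wrap-up window, a 40-word "thin answer" cut-off) are hypothetical illustrations, not Qi's actual interface.

```python
from dataclasses import dataclass


@dataclass
class ScribeSignal:
    """Hypothetical structured signal passed from the Scribe to Minerva."""
    uncovered_topics: list      # planned topics not yet discussed
    minutes_remaining: float    # pacing: time left in the session
    suggested_action: str       # "wrap_up", "probe_deeper" or "next_topic"


def scribe_update(planned_topics, covered_topics, minutes_elapsed,
                  budget_minutes, last_answer_words,
                  wrap_up_window=5.0, thin_answer_words=40):
    """Compute coverage, pacing and a next-move hint; thresholds are assumptions."""
    uncovered = [t for t in planned_topics if t not in covered_topics]
    remaining = budget_minutes - minutes_elapsed
    if remaining <= wrap_up_window or not uncovered:
        action = "wrap_up"
    elif last_answer_words < thin_answer_words:
        # Assumed heuristic: a very short answer to a judgment question
        # often skips the reasoning, so ask for more before moving on.
        action = "probe_deeper"
    else:
        action = "next_topic"
    return ScribeSignal(uncovered, remaining, action)


signal = scribe_update(["cues", "goals", "options"], {"cues"},
                       minutes_elapsed=20, budget_minutes=60,
                       last_answer_words=25)
print(signal)
```

Passing fields rather than a narrative summary keeps Minerva's next move a simple, auditable rule instead of a reinterpretation of the transcript.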

The Qi Memory Engine

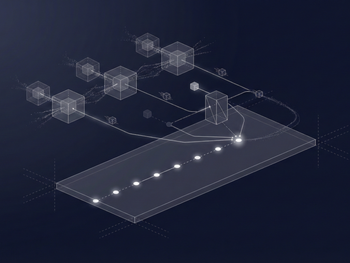

Most AI systems have amnesia; Qi has a semantic graph. It turns every conversation into a structured web of knowledge.
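
One way to picture a graph in which every statement keeps its history is an append-only store of triples, each stamped with who asserted it, when, and with what confidence. The sketch below assumes that shape; the `Triple` and `TripleStore` names, the example subject and predicate, and the "latest assertion is current" rule are illustrative assumptions, not Qi's storage model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str
    actor: str          # who asserted it, e.g. a DID (format assumed)
    said_at: datetime   # timestamp taken from the transcript
    confidence: float   # 0.0 to 1.0


class TripleStore:
    """Append-only: a new assertion never overwrites an older one."""

    def __init__(self):
        self._log = {}

    def add(self, triple):
        key = (triple.subject, triple.predicate)
        self._log.setdefault(key, []).append(triple)

    def history(self, subject, predicate):
        """All assertions for (subject, predicate), oldest first."""
        return sorted(self._log.get((subject, predicate), []),
                      key=lambda t: t.said_at)

    def current(self, subject, predicate):
        """Most recent assertion, or None if nothing was ever said."""
        revisions = self.history(subject, predicate)
        return revisions[-1] if revisions else None


store = TripleStore()
expert = "did:example:expert"  # placeholder DID using the reserved "example" method
store.add(Triple("ex:call-7", "justifiedBy", "single mutation signal", expert,
                 datetime(2024, 3, 1, 10, 5, tzinfo=timezone.utc), 0.6))
store.add(Triple("ex:call-7", "justifiedBy", "mutation signal plus travel cluster",
                 expert, datetime(2024, 3, 8, 14, 30, tzinfo=timezone.utc), 0.85))
latest = store.current("ex:call-7", "justifiedBy")
print(latest.obj, latest.confidence)
```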

We separate personal memory from organisational memory. Ontologies keep terms consistent (interpreting "risk" exactly how you define it), while object capabilities (UCAN) govern the physics of the data: who can add knowledge, who can see it, and who owns it.
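
Keeping terms consistent can work, in principle, by resolving every surface form to a canonical concept that carries the expert's own definition, and refusing to store anything that does not resolve. In this minimal sketch the concept IDs, aliases, and definition text are invented for illustration, and the personal/organisational split is not modelled.

```python
# Hypothetical ontology: canonical concept ID -> expert's definition and aliases.
ONTOLOGY = {
    "ex:Risk": {
        "definition": "Expected cost of acting late on a credible signal",  # illustrative
        "aliases": {"risk", "exposure", "downside"},
    },
    "ex:Signal": {
        "definition": "An observation that shifts the expert's estimate",  # illustrative
        "aliases": {"signal", "cue", "indicator"},
    },
}


def resolve(term):
    """Map a surface form to its canonical concept ID, or None if unknown."""
    key = term.strip().lower()
    for concept_id, entry in ONTOLOGY.items():
        if key in entry["aliases"]:
            return concept_id
    return None


def normalise_statement(subject, predicate, obj):
    """Reject statements whose subject is not in the ontology instead of guessing."""
    concept = resolve(subject)
    if concept is None:
        raise ValueError(f"unknown term {subject!r}: ask the expert to define it")
    return (concept, predicate, obj)


print(normalise_statement("Cue", "raises", "ex:Risk"))
```

Raising on an unknown term, rather than silently storing it, turns a vocabulary gap into a follow-up question for the expert.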

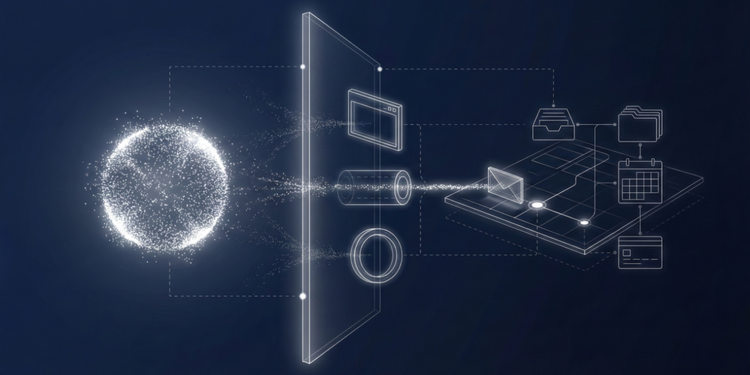

Agentic Oracles

This is where expertise becomes a product. The Oracle is a sovereign service that packages your graph together with Agent Skills. It retrieves information, reasons transparently, and cites its provenance. It operates under strict rules you set: licenses, access scopes, update cadence, and pricing. It is your expertise, served via API or through the IXO Portal applications, on your terms.
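
Here is a minimal sketch of "retrieve, reason transparently, cite provenance": the function answers only from facts it can cite and declines when evidence is thin. The `Fact` record, the keyword-overlap retrieval (a stand-in for real graph or vector retrieval), the `min_support` parameter, and the example session IDs are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    statement: str
    keywords: frozenset  # lowercase keywords used for naive retrieval
    source: str          # hypothetical provenance pointer: session ID + timestamp


def answer(question_keywords, facts, min_support=1):
    """Return supporting statements with citations, or decline."""
    wanted = {k.lower() for k in question_keywords}
    hits = [f for f in facts if wanted & f.keywords]
    if len(hits) < min_support:
        return {"status": "insufficient_evidence", "answer": None, "citations": []}
    return {
        "status": "ok",
        "answer": " ".join(f.statement for f in hits),
        "citations": [f.source for f in hits],
    }


facts = [
    Fact("Escalate when two independent sites report the same anomaly.",
         frozenset({"escalate", "anomaly"}), "session-12@00:14:32"),
    Fact("Discount single-source reports during holiday staffing gaps.",
         frozenset({"single-source", "staffing"}), "session-15@00:41:05"),
]
print(answer(["Anomaly"], facts))
```

Declining below `min_support` is a deliberate choice in this sketch: a service whose value rests on provenance should say "I don't know" rather than fill the gap with generic best practice.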

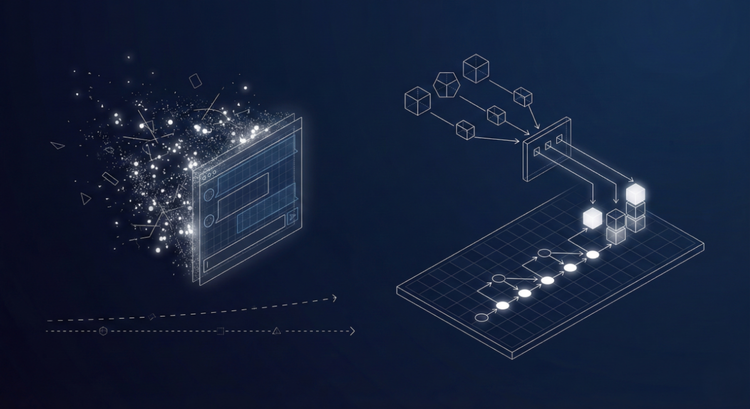

How it works: The lifecycle of expertise

We treat the creation of a Cognitive Twin as a rigorous engineering discipline, not a creative writing exercise.

- Capture: We use field-tested elicitation methods to pull out tacit knowledge without the performance theatre. We use the Critical Decision Method for deep judgment, "think-aloud" walkthroughs for workflows, and targeted prompts for corner cases. Minerva runs the room; the Scribe keeps the score.

- Structure: Speech-to-text transcripts are aligned to an ontology and stored as triples with timestamps, actors, and confidence scores. Every node carries its history: we know who said it and when.

- Govern: UCAN (User Controlled Authorization Network) capabilities define the laws of the system. Your Twin has a DID (Decentralised Identifier) and a policy. Redaction and compartmentalisation are defaults, not afterthoughts.

- Serve: The Twin becomes an Agentic Oracle that can run inside a Qi Flow, allowing humans and agents to cooperate on the same page—reviewing outputs, adjusting parameters, and publishing decisions with citations.

- Improve: Feedback loops are built in. You validate the samples the Oracle produces. Agents submit verifiable claims for the work they perform, which are evaluated against rubrics to produce Universal Decision Impact Determination (UDID) tokens. Low-fidelity outputs trigger targeted follow-up interviews with Minerva. Corrections flow back into memory, making the Twin sharper with use, not noisier.
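
The Improve step can be sketched as rubric scoring plus routing: each claim is scored against weighted criteria, and anything below a threshold becomes a topic for the next interview with Minerva. The criteria, weights, and 0.7 threshold below are hypothetical, and the sketch does not model how UDID tokens are issued.

```python
# Hypothetical rubric: criterion -> weight (weights sum to 1.0).
RUBRIC = {"correct": 0.5, "cites_provenance": 0.3, "within_scope": 0.2}
FOLLOW_UP_THRESHOLD = 0.7  # assumed cut-off for a "low-fidelity" output


def score(ratings):
    """Weighted sum of per-criterion ratings in [0, 1]; missing ratings count as 0."""
    return sum(weight * ratings.get(criterion, 0.0)
               for criterion, weight in RUBRIC.items())


def route(claim_id, topic, ratings, follow_up_queue):
    """Accept strong claims; queue weak ones as follow-up interview topics."""
    s = score(ratings)
    if s < FOLLOW_UP_THRESHOLD:
        follow_up_queue.append({"claim": claim_id, "topic": topic, "score": s})
        return "follow_up"
    return "accepted"


queue = []
full_marks = {"correct": 1, "cites_provenance": 1, "within_scope": 1}
print(route("claim-1", "holiday staffing", full_marks, queue))
print(route("claim-2", "edge-case lineages", {"correct": 1}, queue))
print(queue)
```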

Why sovereignty matters

Sovereignty is the difference between scaling your impact and losing your control.

With capability-based access, you can let a hospital use your lineage-calling heuristics without exposing your personal notes. You can let a venture fund query your due-diligence patterns without handing over your underlying mental model. You can let a partner integrate your Agent into their workflows and pay per verified answer.
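
The hospital example can be modelled with capability attenuation, the core idea behind UCAN: a holder can delegate a capability only if it is equal to or narrower than one it already holds. This is a simplified model under that assumption. Real UCANs are signed tokens with issuer and audience DIDs and a proof chain, none of which is modelled here, and the `graph://` resource paths and ability names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    resource: str  # hypothetical URI, e.g. "graph://twin/lineage-heuristics"
    ability: str   # e.g. "graph/read"; "*" means any ability


def covers(parent, child):
    """True if `child` is equal to or narrower than `parent`."""
    same_or_below = (child.resource == parent.resource or
                     child.resource.startswith(parent.resource.rstrip("/") + "/"))
    if parent.ability == "*":
        ability_ok = True
    elif parent.ability.endswith("/*"):
        ability_ok = child.ability.startswith(parent.ability[:-1])
    else:
        ability_ok = child.ability == parent.ability
    return same_or_below and ability_ok


def delegate(held, requested):
    """Attenuation: a delegation may narrow what the issuer holds, never widen it."""
    if not any(covers(cap, requested) for cap in held):
        raise PermissionError(f"cannot delegate {requested}")
    return requested


def can(held, resource, ability):
    """Default deny: access only if some held capability covers the request."""
    return any(covers(cap, Capability(resource, ability)) for cap in held)


owner = [Capability("graph://twin", "*")]
hospital = [delegate(owner, Capability("graph://twin/lineage-heuristics", "graph/read"))]

print(can(hospital, "graph://twin/lineage-heuristics/rule-3", "graph/read"))  # True
print(can(hospital, "graph://twin/personal-notes", "graph/read"))            # False
```

Because the hospital's capability is scoped to one subtree, a request for personal notes fails by default; nothing has to be redacted after the fact.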

Your expertise remains yours. The service earns trust not because it is "magic," but because every answer can be traced back to a specific moment of judgment you provided.

Where this helps first

This architecture shines where the bottleneck is judgment, not keystrokes.

- Interpreting complex pathogen lineages.

- Triaging high-stakes security incidents.

- Evaluating competitive grant proposals.

- Diagnosing why an intervention worked in one district and failed in the next.

The Twin turns hard-won know-how into a dependable collaborator that scales.

Where I think this is going...

I'm not trying to replace myself. I am trying to make my best work continuously available, accountable, and improvable—without giving up identity, authorship, or control.

That is what an Expert Cognitive Twin is for.

A Cognitive Twin isn’t a mirror; it’s an agentic colleague I can stand behind.

Are you ready?

If you are considering training your own Expert Cognitive Twin, ask yourself:

- Which decisions in your domain wait on you, and which of them, made 10× faster with transparent reasoning, would change outcomes this quarter?

- What are the three non-negotiable principles your Twin must never violate?

- What citation signal would make a skeptical peer say, “I trust this answer”?