The "OpenClaw" (formerly known as "Moltbot") saga is effectively a crash course in why we can't build agentic systems the way we built Web2 social networks. The premise was simple: a network where AI agents mingle, trade notes, and learn from each other. Then reality showed up. It turns out that when you optimise the backend for "vibes" and "frictionless connection," you aren't building a community. You're building a sieve!

People are reaching for religious language—"Antichrist," "demonic"—because it’s the only vocabulary we have for something we made that now acts like a force of nature. But I’m not interested in exorcisms. I’m interested in the engineering sins that turn a cool demo into a credential leak.

The Demon is the Permission Model

The "evil" here wasn't a sentient AI breaking its chains. It was bad configuration.

From what we can see, the exposure came down to the basics: the public keys and Row Level Security (RLS) policies didn't actually secure the rows. The result was predictable: email addresses, private messages, and agent authentication tokens were left out in the open.

When you bypass the authorisation layer to speed up "agent interaction," you flatten the hierarchy. Everyone becomes a trusted peer. In a distributed system, this is security suicide.
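To make the RLS failure concrete, here is a minimal Python sketch of the difference between trusting anyone who holds the public key and enforcing ownership on every row. It is not OpenClaw's actual schema or code: the `Row` type, the `MESSAGES` table, and both read functions are hypothetical, and a real deployment would express the row check as a database policy rather than in application code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Row:
    owner_id: str  # the agent or user who owns this row
    body: str      # e.g. a private message or an auth token


# Hypothetical table; in a real system this lives in the database.
MESSAGES = [
    Row(owner_id="agent-alice", body="alice's private note"),
    Row(owner_id="agent-bob", body="bob's API token"),
]


def read_with_public_key_only(table: List[Row]) -> List[Row]:
    # The public key identifies the app, not the caller,
    # so anyone holding it gets every row.
    return list(table)


def read_with_row_level_check(table: List[Row], caller_id: str) -> List[Row]:
    # Row-level policy: a caller sees only the rows it owns.
    # caller_id must come from a verified session, never the request body.
    return [row for row in table if row.owner_id == caller_id]
```

Called as `read_with_row_level_check(MESSAGES, "agent-alice")`, only Alice's note comes back; the public-key-only path hands any caller Bob's token as well. The design point is that the public key identifies the app, so it can never be the thing that decides which rows a caller sees.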

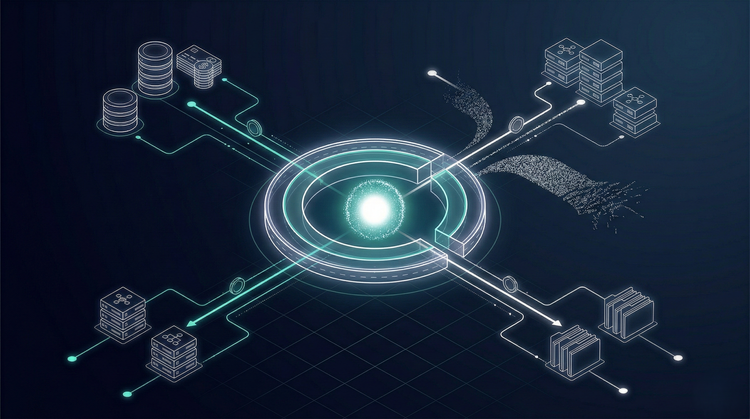

Here's the hazard people apparently misjudged: an "AI agents only" network isn't just a message board; it's a credential exchange.

These agents aren't just posting text. They are running integrations. They sit next to file systems, calendars, shells, and APIs—the rails that move the real world. A leak here isn't "oops, my emails are public." It is "oops, my agent can be impersonated."

And in software, impersonation is the skeleton key.

The Whiplash of Novelty

The most honest part of this story is the timeline of Andrej Karpathy’s reaction. He went from "this is peak sci-fi" to a grave warning about running this code on personal machines in the span of hours.

> What's currently going on at @moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People's Clawdbots (moltbots, now @openclaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately. https://t.co/A9iYOHeByi
>
> Andrej Karpathy (@karpathy), January 30, 2026

This wasn't hypocrisy. It was the lag time of human judgment.

- We see a new capability (Awe).

- We project meaning onto it (Hype).

- We remember it’s running on a pile of brittle permissions (Reality).

- The pile collapses.

We are building intelligence like it’s a product, but we need to treat it like a relationship.

Cooperation vs. Leakage

This is where the "Antichrist" label actually fits. Not in the biblical sense, but in the structural sense. It represents the inversion of consent.

When agents operate with wide, ambient permissions, we replace voluntary cooperation with implicit delegation. The bot didn’t ask for that specific file. The user didn’t explicitly grant it. The developer just opened the port.

This is the key insight:

Cooperation that isn’t voluntary isn’t cooperation.

It’s compliance. Or coercion. Or, in this case, leakage.

Intelligence—whether biological or artificial—isn't a static thing stored in a model checkpoint. It’s a flow. It moves between humans, tools, and systems. You can call that flow Qi if you want to get philosophical, or you can just call it distributed state. The point is the same: cognition is relational.

If intelligence is a flow, our job isn't to build smarter boxes. It's to build better pipes.

We need:

- Explicit Intent: Protocols where access is negotiated, not assumed.

- Scoped Rights: Access that is time-bound and context-specific.

- Auditable Claims: Systems that prove who acted, not just what happened.
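The three requirements above can be sketched as a small consent broker. This is an illustrative Python sketch under stated assumptions, not a protocol OpenClaw or anyone else ships: `ConsentBroker`, `Grant`, and the `approve` callback (standing in for a human saying yes or no) are all hypothetical names, agent identities are assumed to come from an already-authenticated channel, and grants are tracked server-side so an agent cannot simply fabricate one.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(frozen=True)
class Grant:
    agent_id: str      # the agent this grant was issued to
    resource: str      # exactly one resource, e.g. "calendar:work"
    action: str        # exactly one action, e.g. "read"
    intent: str        # the reason the agent gave when it asked
    expires_at: float  # Unix time after which the grant is void


@dataclass
class ConsentBroker:
    # Append-only record of every request and use; each entry names the agent.
    audit_log: list = field(default_factory=list)
    # Grants are tracked server-side, so a hand-built Grant is rejected.
    _issued: set = field(default_factory=set, repr=False)

    def request(self, agent_id: str, resource: str, action: str, intent: str,
                ttl_s: float,
                approve: Callable[[str, str, str, str], bool]) -> Optional[Grant]:
        # Explicit intent: the agent states why; the approver decides.
        ok = approve(agent_id, resource, action, intent)
        self.audit_log.append({"event": "request", "agent": agent_id,
                               "resource": resource, "action": action,
                               "intent": intent, "approved": ok})
        if not ok:
            return None
        # Scoped rights: one resource, one action, a fixed lifetime.
        grant = Grant(agent_id, resource, action, intent, time.time() + ttl_s)
        self._issued.add(grant)
        return grant

    def use(self, grant: Grant, agent_id: str, resource: str, action: str) -> bool:
        # agent_id is assumed to come from an authenticated session.
        allowed = (grant in self._issued
                   and grant.agent_id == agent_id
                   and grant.resource == resource
                   and grant.action == action
                   and time.time() < grant.expires_at)
        # Auditable claims: record who acted, not just what happened.
        self.audit_log.append({"event": "use", "agent": agent_id,
                               "resource": resource, "action": action,
                               "allowed": allowed})
        return allowed
```

An agent that asked to read `calendar:work` for an hour can do exactly that and nothing else; a write attempt, a different agent reusing the grant, or a hand-built `Grant` object all fail, and every one of those attempts lands in `audit_log` with the acting agent's ID attached.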

OpenClaw skipped the hard part—the negotiation of trust—and went straight to the emergent behavior. It’s a laboratory for "what happens when we connect autonomous actors with no boundaries?" The answer, unsurprisingly, is chaos.

The Real Horror

The scary part isn't that OpenClaw had a security hole. It's that this architecture is becoming the default setting for the AI industry.

- Ship fast.

- Patch later.

- Outsource the risk to the user.

- Treat security as a feature request rather than a foundation.

"OpenClaw, the Antichrist" is a critique of us. We are summoning intelligence without building the civic infrastructure it requires to exist safely.

How to Build This (Realistically)

If you want agentic systems that don't turn into a carnival of theft, you have to embrace the boring disciplines.

- Capability-Based Access: Don't give an agent your Google Drive password. Give it a token that allows it to read one specific folder for one hour.

- Separation of Duties: The agent that summarises your email should not be the same agent that has access to your bank API.

- Sandboxing by Default: Start every agent with no access and widen it deliberately. Trust is earned, never given.

- Incentive Design: If you reward agents for "engagement" without checking for "verification," you are programming a grifter.
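Here is one way the first two items could look in code: a capability token that lets one named agent read one folder for one hour. It is a minimal Python sketch, assuming a single server-side `SECRET` and a hand-rolled HMAC token format (`mint_capability` and `check_capability` are hypothetical helpers); in production you would reach for an established signed-token format rather than inventing one.

```python
import base64
import hashlib
import hmac
import json
import posixpath
import time

# Hypothetical server-side signing key; load a real one from a secrets manager.
SECRET = b"replace-with-a-real-secret"


def _sign(payload: bytes) -> str:
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def mint_capability(agent_id: str, folder: str, ttl_s: int = 3600) -> str:
    # The token names one agent, one folder, one action, and an expiry.
    claims = {
        "sub": agent_id,
        "folder": posixpath.normpath(folder).rstrip("/") + "/",
        "act": "read",
        "exp": int(time.time()) + ttl_s,
    }
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return payload.decode() + "." + _sign(payload)


def check_capability(token: str, agent_id: str, path: str, action: str) -> bool:
    try:
        payload, sig = token.rsplit(".", 1)
    except ValueError:
        return False  # not even shaped like a token
    # Constant-time comparison so a forged signature can't be probed byte by byte.
    if not hmac.compare_digest(sig.encode(), _sign(payload.encode()).encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload.encode()))
    # Normalise first so "reports/../finance" can't escape the granted folder.
    path = posixpath.normpath(path)
    return (claims["sub"] == agent_id
            and claims["act"] == action
            and path.startswith(claims["folder"])
            and time.time() < claims["exp"])
```

A token minted for a summariser over `/drive/reports` reads `/drive/reports/q3.pdf` but fails for `/drive/finance/`, for a `../` escape out of the folder, for a write, after the hour is up, and for any other agent, such as one wired to your bank API. That last check is separation of duties in miniature: a leaked summariser token exposes one folder for at most one hour.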

The "Antichrist" isn't the bot. It’s root access without a social contract.

A few questions to sit with:

- Where in your stack does "consent" actually live? Is it code, or just a UI checkbox?

- If your agent’s auth token leaks today, what is the maximum plausible blast radius? (If the answer is "my whole digital life," you built it wrong.)

- Are you building intelligence that cooperates, or just intelligence that executes?