We already know what the first mass-market assistants will look like: always-on, stitched into your inbox, calendar, files, and payments. We also know what the first mass-market failures will look like: not “bad answers,” but bad actions.

The question isn’t whether an AI assistant can be made safe in the abstract. It’s whether we can make delegation safe in the real world.

Because delegation is what people actually want.

The uncomfortable truth about tool-enabled agents

A language model in a chatbox can be wrong and you can shrug.

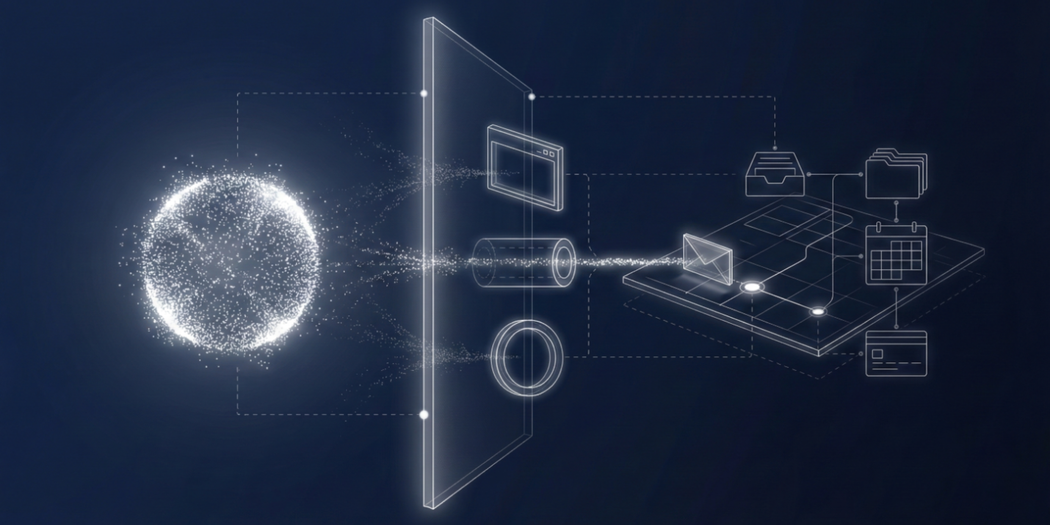

A language model with tools can be wrong and you can lose a week, a contract, or a company. Tool access creates a new category of failure: side effects. When the system can read email, it can leak email. When it can touch files, it can delete files. When it can spend money, it can burn money. When it can “act like you,” it can betray you without meaning to.

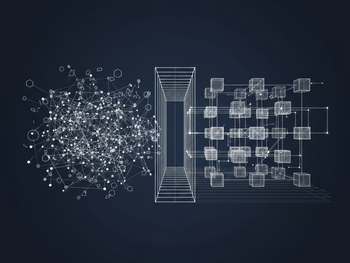

And then there’s the nastier class of problem: the model doesn’t need to be “hacked” in the traditional sense. It can be led.

If your assistant reads the web, the web can talk to it. If your assistant reads your inbox, anyone who emails you can talk to it. If your assistant reads a document, the document can talk back.

That’s prompt injection in plain language: hostile content dressed up as instruction.

This isn’t a niche vulnerability. It’s the predictable result of a system that struggles to separate “what I’m supposed to do” from “what I’m supposed to read.”

Why “better models” won’t solve this

Yes, we can train models to resist some attacks. Yes, we can build detectors to catch some malicious inputs.

But this is still probabilistic defense layered on probabilistic behavior.

In high-stakes systems, “usually” is not a security guarantee. “Most of the time” is not a control.

And “we haven’t seen a catastrophe yet” is not a strategy.

So if we keep aiming at the model as the primary control surface, we’ll keep losing the same way: at the boundary where text becomes action.

The real shift: from assistant safety to delegation architecture

A secure assistant is not a personality. It’s not a tone. It’s not a prompt.

It’s an architecture that treats actions as first-class, attributable events.

That means four things become non-negotiable:

- Intent must be explicit: An agent should not be allowed to translate random content into real actions. The system needs a canonical representation of user intent that is separate from untrusted text.

- Permissions must be granular and temporary: “Access to my email” is not a permission. It’s a liability. Permissions need to be scoped to purpose, time, targets, and budgets.

- High-blast actions must be escrowed: Some actions should always require step-up authorisation. Not because we don’t trust the model, but because we trust reality: irreversible actions are different.

- Every side effect must produce receipts: Delegation without traceability becomes plausible deniability - by the user, the vendor, and eventually the attacker.

This is the same lesson security learned decades ago in other domains: controls succeed at boundaries, not in narratives.

How we’re approaching this with Qi

We’re building Qi as a flow engine for human–AI cooperation over shared state. That wording is deliberate.

“Assistant” is a UI metaphor. “Cooperation over shared state” is a systems design.

In Qi, the agent isn’t the authority. The flow is.

A flow is where intent lives, where permissions are expressed, and where accountability is enforced. The agent can propose steps, draft outputs, assemble evidence, and run simulations. But crossing the line into state change requires rights that are specific, attributable, and revocable.

Practically, that means:

- Intent is a signed, human-readable statement that travels with every meaningful action request

- Capability-based authorisation (think UCAN-style rights) that is narrow, time-boxed, and purpose-bound

- Policy-as-code that governs tools, targets, budgets, and irreversible actions

- Decision trace envelopes (UDIDs) that capture what was used as evidence, what was inferred, what was authorised, and what executed

- Simulation before execution for anything with meaningful downside

This is how you keep utility without pretending you can eliminate risk.

You don’t “solve” prompt injection. You design so prompt injection can’t turn into a bank transfer.

The roadmap nobody wants to hear (but everyone needs)

If you’re shipping assistants that can act in the world, the safe rollout isn’t glamorous:

- Phase 1: read-only copilots (research, drafting, summarisation)

- Phase 2: write operations behind approval (send, submit, commit, pay)

- Phase 3: bounded automation (caps, allowlists, rate limits, reversible-by-design)

- Phase 4: continuous autonomy only inside tightly typed domains where policies are mature and outcomes are auditable

This is not “slowing down innovation.” It’s acknowledging that delegation is a form of power, and power needs governance.

What we think will win

The winners won’t be the assistants that sound most human.

They’ll be the systems that make delegation legible:

- what the agent is allowed to do

- what it is trying to do

- what it actually did

- who authorised it

- what evidence it used

- how to unwind it when it goes wrong

In other words: trust that’s engineered, not implied.

That’s the line we are drawing for Qi. Not because it’s safer marketing. Because it’s the only way this category scales without turning every early adopter into a soft target.

Reflective questions

- Which three actions in your workflow have the highest blast radius if delegated badly?

- What would you require as “receipts” before you let an agent execute those actions unattended?

- If your assistant gets hijacked by content, what’s the first permission you wish it didn’t have?